It sounds backwards: more rules mean faster adoption. But the data says it’s true.

Our 2025 State of AI in Procurement report found that a few industries pair the highest rates of AI deployment with the most extensive rules, roles, and processes for AI evaluation, approval, and scaling.

We’re going to break down the surprising link between structure and scale in procurement AI deployments and reveal how leading industries implement tools intentionally.

Actually, more rules can lead to more AI deployment

When we began to sift through the data from our comprehensive independent survey of 800+ procurement professionals, a clear pattern emerged. Industries are taking very different paths to adopting AI.

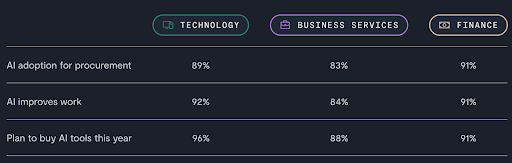

That in and of itself isn’t too surprising, because new technologies always have early adopters and later converts. However, the industries that have pushed AI adoption close to or past 90% actually have more governance in place than other industries, not less. These aren’t teams that are jumping headfirst into new technology without careful consideration.

Finance, for example, leads with 91% procurement AI adoption despite (or because of) its guardrails. Nearly half of finance teams operate under strict IT guidelines for buying AI tools, and another 39% under extensive leadership-driven ones. Looking forward, 73% of procurement teams in the finance industry plan to purchase AI tools for both their own department and others. For reference, that’s the highest cross-functional adoption rate of any industry. They’re not just dabbling; they’re scaling.

Similar patterns emerge in technology and business services, too. 74% of tech companies have extensive buying guidelines from IT or company leadership, and procurement teams have some of the highest adoption rates across AI use cases like workflow automation and data analysis.

In business services, 69% of organizations require legal and IT approval of AI tools, and procurement teams in this industry lead adoption of AI use cases like conducting spend analysis and identifying cost-saving opportunities.

So why do rules act like an accelerator instead of a brake? It comes down to trust, clarity, and collaboration.

How structure speeds up AI adoption

Guardrails don’t choke adoption; they give teams the confidence and clarity to move from one-off experiments to enterprise-wide scale. With governance in place, teams know the boundaries, leaders understand the risks, and adoption can move faster.

Here’s why procurement AI deployments need governance:

- Establish much-needed trust. 24% of respondents across industries say security concerns have gotten in the way of AI adoption at their company. And yet it seems that governance and guardrails build confidence. Industries with some of the most extensive buying guidelines are the most comfortable with using the tools—56% of procurement professionals in the technology industry would be comfortable using fully autonomous AI.

- Eliminate decision paralysis. In a market flooded with AI vendors, governance gives organizations a shared playbook. They know what criteria matter, what thresholds require review, and who signs off. That structure keeps adoption from stalling in endless debate.

- Encourage collaboration. AI adoption spans IT, legal, procurement, and business units. Without governance, decisions get siloed or stalled. Structured approval flows force coordination. Tech again offers an example: 54% of companies require both legal and IT approval for AI purchases—a guardrail that has helped them achieve some of the highest usage rates of AI tools across procurement tasks.

Steps to build structure (not bureaucracy)

When done right, governance reduces risk, builds trust, and gives teams clarity so pilots don’t stall. Rules for rules’ sake, though, can quickly turn into red tape when so many voices are involved. Here’s how to thoughtfully build AI deployment governance.

1. Document what you already have

Begin where you are. Map out today’s approval flows, decision gates, and governance structure. Identify bottlenecks, ambiguity in roles, and discretionary “shadow” pathways that bypass formal oversight. This becomes your “baseline map.”

Questions to ask:

- Where are approvals consistently delayed?

- Who owns authority at different spend or risk thresholds?

- Which decisions bypass formal oversight?

- How do you manage contract value leakage?

“Procurement is mandated by policy, with certain thresholds allowing discretionary spend. Policy gives us governance leverage even where adoption is inconsistent.”

– VP of Strategic Sourcing, Leading Global Hospitality Brand

2. Stand up a cross-functional AI governance committee

AI adoption spans IT, legal, compliance, procurement, and business units. An AI governance committee prevents silos and creates a single table for balancing risk and speed.

Steps to take:

- Bring in diverse stakeholders (legal, IT, procurement, compliance, operations)

- Define the committee’s charter, roles, and escalation paths

- Set risk tiers and review criteria

- Establish a regular review cadence for AI proposals
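For teams that want to write their risk tiers down in a form that can be checked automatically, here is a minimal sketch. The tier names, the two risk factors, and the reviewer lists are all assumptions made for illustration; your committee's charter should define the real ones.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical reviewers per tier; adjust to your committee's charter.
REQUIRED_REVIEWERS = {
    RiskTier.LOW: {"procurement"},
    RiskTier.MEDIUM: {"procurement", "it"},
    RiskTier.HIGH: {"procurement", "it", "legal", "compliance"},
}


def assign_tier(handles_sensitive_data: bool, customer_facing: bool) -> RiskTier:
    """Assumed rule of thumb: each risk factor raises the tier by one."""
    factors = int(handles_sensitive_data) + int(customer_facing)
    return RiskTier(1 + factors)


def missing_approvals(tier: RiskTier, approvals: set[str]) -> set[str]:
    """Return the reviewers who still need to sign off for this tier."""
    return REQUIRED_REVIEWERS[tier] - approvals


tier = assign_tier(handles_sensitive_data=True, customer_facing=True)
print(tier.name, sorted(missing_approvals(tier, {"procurement", "it"})))
```

Keeping the tier-to-reviewer mapping in one place makes it easy for the committee to revisit it at each review without touching the rest of the process.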

“We are in the wild west of artificial intelligence right now. There’s a ton of excitement, tons of people rushing into this amazing opportunity for productivity. But it’s a lawless place. There are no common standards. There are tons of risks – from data security, to content proliferation, to who knows, maybe the end of civilization!

Just because our governments and experts haven’t established regulations around AI doesn’t mean we don’t need them. On the contrary, we need them now more than ever – because the latest generation of AI is so powerful that if we wait for the law to catch up, we’re going to miss out on a massive opportunity to get faster, smarter, and more effective at everything we do.”

– John Fiedler, Chief Information Security Officer, Ironclad

3. Define shared evaluation criteria

Once you have visibility, create a consistent rubric for evaluating AI tools. This reference point eliminates decision paralysis by giving stakeholders a framework for quick, confident calls.

Criteria to consider:

- Data security and privacy protections

- Explainability, fairness, and bias risk

- Business impact & ROI

- Escalation thresholds (e.g., “If spend > $X, escalate to committee”)

- Regulatory and compliance requirements
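One way to make a rubric like this concrete is a weighted score plus an explicit escalation rule. The sketch below is illustrative only: the weights, the 1 to 5 scoring scale, the passing score, and the dollar figure standing in for "$X" are all placeholder assumptions, not recommendations from the report.

```python
from dataclasses import dataclass

# Hypothetical weights for the criteria listed above; they sum to 1.0.
WEIGHTS = {
    "security_privacy": 0.30,
    "explainability_bias": 0.20,
    "business_impact": 0.25,
    "regulatory_compliance": 0.25,
}

# Placeholder values; the "$X" threshold is yours to define.
ESCALATION_SPEND_USD = 50_000
MIN_PASSING_SCORE = 3.5


@dataclass
class Proposal:
    tool: str
    annual_spend_usd: float
    scores: dict[str, int]  # 1 (weak) to 5 (strong) per criterion


def weighted_score(p: Proposal) -> float:
    """Combine per-criterion scores into a single weighted number."""
    return sum(WEIGHTS[c] * p.scores[c] for c in WEIGHTS)


def route(p: Proposal) -> str:
    """Apply the escalation threshold first, then the rubric score."""
    if p.annual_spend_usd > ESCALATION_SPEND_USD:
        return "escalate to committee"
    if weighted_score(p) < MIN_PASSING_SCORE:
        return "reject or rework"
    return "approve via standard flow"


proposal = Proposal(
    tool="example-tool",
    annual_spend_usd=20_000,
    scores={
        "security_privacy": 4,
        "explainability_bias": 3,
        "business_impact": 5,
        "regulatory_compliance": 4,
    },
)
print(round(weighted_score(proposal), 2), route(proposal))
```

Checking the spend threshold before the score is a design choice in this sketch: it ensures large purchases always reach the committee, even when the tool scores well.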

Each department and stakeholder can prepare for this step by learning about their role-specific considerations for AI tools:

- GenAI for Enterprise Procurement Teams

- AI, IT & Contracts: Everything You Need to Know

- AI for Legal Teams: The No-Nonsense Guide

4. Pilot, learn, refine

Governance doesn’t have to be perfect to start. Pilot projects act as testbeds for your framework, surfacing gaps in criteria, approval flows, or accountability.

Tips for piloting:

- Choose non-critical but real use cases (we cover the most impactful use cases in our report)

- Document delays and decision points

- Collect feedback from both users and reviewers

- Adjust thresholds or criteria based on results
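If you log when each pilot proposal enters and leaves a review stage, a few lines of analysis can show where delays cluster. This is a rough sketch using made-up data; the stage names, dates, and log format are hypothetical and would come from your own approval system.

```python
from collections import defaultdict
from datetime import date
from statistics import median

# Hypothetical pilot log: (proposal, stage, date submitted, date decided)
PILOT_LOG = [
    ("tool-a", "it_review", date(2025, 3, 3), date(2025, 3, 5)),
    ("tool-a", "legal_review", date(2025, 3, 5), date(2025, 3, 19)),
    ("tool-b", "it_review", date(2025, 3, 10), date(2025, 3, 14)),
    ("tool-b", "legal_review", date(2025, 3, 14), date(2025, 3, 24)),
]


def median_days_by_stage(log):
    """Median number of days each stage took across all proposals."""
    days = defaultdict(list)
    for _proposal, stage, submitted, decided in log:
        days[stage].append((decided - submitted).days)
    return {stage: median(values) for stage, values in days.items()}


def slowest_stage(log):
    """Name of the stage with the highest median turnaround."""
    medians = median_days_by_stage(log)
    return max(medians, key=medians.get)


print(median_days_by_stage(PILOT_LOG))
print("Slowest stage:", slowest_stage(PILOT_LOG))
```

A median is used here rather than a mean so that one unusually slow review doesn't dominate the picture; either could be reasonable depending on how many pilots you run.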

5. Continuously monitor and evolve

Governance isn’t one-and-done. As AI evolves, so do the risks. Build in regular reviews to monitor adoption, security incidents, and business impact. Adjust policies and oversight accordingly.

Questions to ask:

- Are our criteria still aligned with the latest risks (e.g., data privacy, third-party models)?

- Do we need to recalibrate thresholds or escalation paths?

- How often should we audit AI use across the business?

See how procurement teams are actually using AI

The industries that lead in procurement AI don’t scale because they gamble. They scale because they govern. Guardrails give teams the trust, clarity, and collaboration needed to move fast without breaking things.

Governance is just one of the ways procurement teams are making AI work for them. Get the State of AI in Procurement report to see the full picture of how teams are actually using AI.

Ironclad is not a law firm, and this post does not constitute or contain legal advice. To evaluate the accuracy, sufficiency, or reliability of the ideas and guidance reflected here, or the applicability of these materials to your business, you should consult with a licensed attorney. Use of and access to any of the resources contained within Ironclad’s site do not create an attorney-client relationship between the user and Ironclad.